About Me

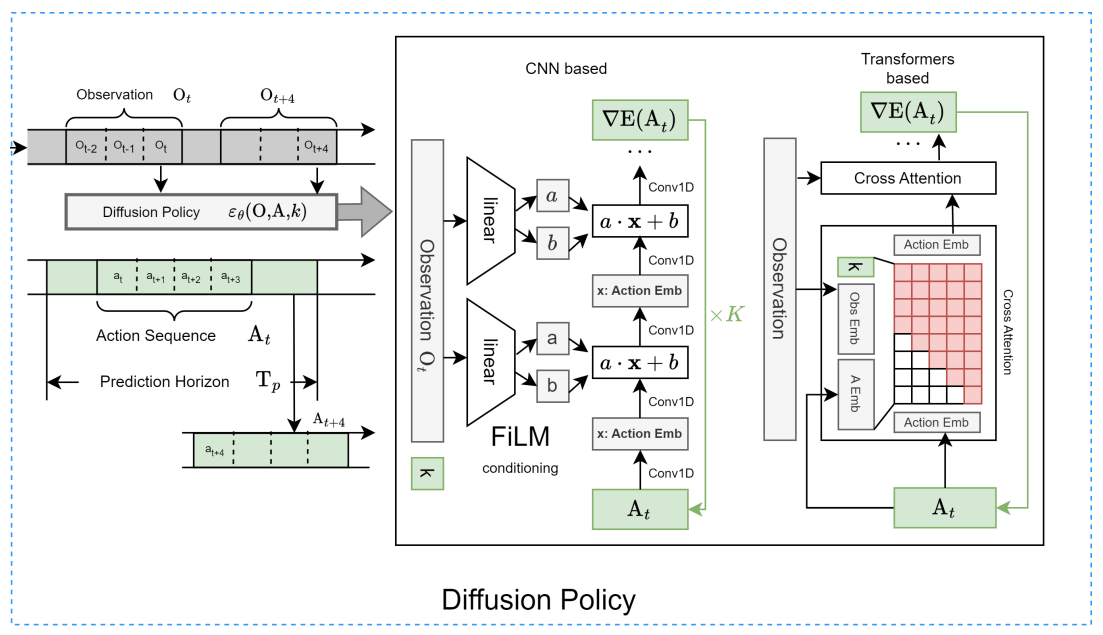

I'm Sabariswaran Mani (Sabarish), a fourth-year undergraduate at the Indian Institute of Technology, Kharagpur. My interests lie in computer vision and robotics, with a focus on autonomous ground vehicles and generative imagery. I'm passionate about bringing these technologies to real-world applications. Beyond research, I have a long-standing love for travel—scroll down to see some of my adventures!

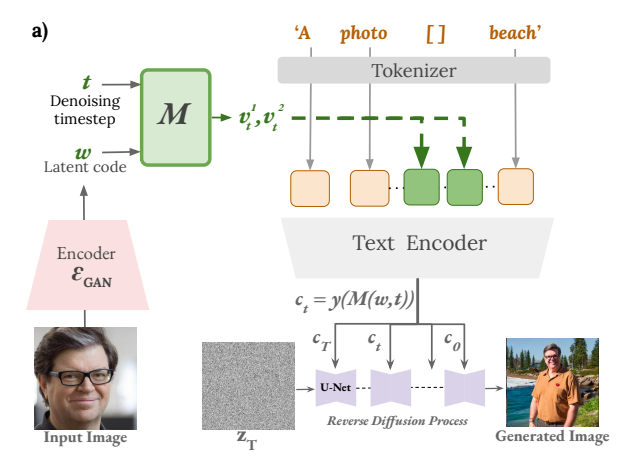

Currently, I am a researcher at the Vision & AI Lab, IISc Bangalore, under the guidance of Prof. Venkatesh Babu, where I explore diffusion models and their applications. I'm also a member of the Autonomous Ground Vehicles Research Group and Quant Club at IIT KGP. Beyond research, I enjoy football, computer games, and a good plate of parotta.